Pleased to announce the successful completion of a tiny computing platform for high-speed, low-power, embedded computing. In terms of size, cost, and carbon footprint, the kernel clocks in at 372 bytes. The full runtime, including the kernel, is 1,218 bytes and provides DSL (domain-specific language) capability for application-specific computing. It is not quite bare metal, as we target use with a keyboard and screen and rely on bare-bones BIOS drivers for these. Thumbnail details below.

What has been achieved:

- 372 byte machine coded kernel (yes, this is bytes, not KB, MB or GB). This is small enough to fit into the smallest ROMs, while still leaving plenty of space for application RAM.

- 40 kernel-level instructions provide the full expressiveness of C for application code.

- Compressed application code runs through a threaded design.

- High-speed code execution (as fast as or faster than C) through native compilation to the chip's machine code.

- A further 846 bytes of core runtime instructions, suitable for loading into RAM, provide domain-specific language (DSL) capability for extending the language, with the full extensibility of a Forth or a Lisp.

- A virtual machine design organized around two stacks simplifies porting between chips without requiring any additional layer. Porting to a new chip takes a single afternoon: map the 40 kernel instructions to the new chip and adapt the kernel code.

- Lightweight development stack with a compiler/assembler for cross-compilation.

- User interface is through keyboard and screen.
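The kernel's source isn't given here, so the following C sketch only illustrates the general technique the bullets name: a virtual machine built around two stacks (a data stack for operands, a return stack for subroutine return addresses) running token-threaded code, in the style of a Forth inner interpreter. The opcode names, the seven-instruction set, and the encoding are hypothetical and are not the kernel's 40 instructions. This sketch also interprets tokens rather than compiling to native code, so it shows the two-stack structure, not the speed claim.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical opcodes: a toy subset, NOT the kernel's actual 40 instructions. */
enum { OP_LIT, OP_ADD, OP_MUL, OP_DUP, OP_CALL, OP_RET, OP_HALT };

#define STACK_DEPTH 32

typedef struct {
    int32_t ds[STACK_DEPTH]; /* data stack: operands and results         */
    int     dsp;             /* index of the next free data-stack slot   */
    int     rs[STACK_DEPTH]; /* return stack: saved instruction pointers */
    int     rsp;             /* index of the next free return-stack slot */
} VM;

static void push(VM *vm, int32_t v) { assert(vm->dsp < STACK_DEPTH); vm->ds[vm->dsp++] = v; }
static int32_t pop(VM *vm)          { assert(vm->dsp > 0); return vm->ds[--vm->dsp]; }

/* Token-threaded inner interpreter: code[] holds opcodes; LIT and CALL are
   followed by one inline operand (a literal value or a target address). */
static int32_t run(VM *vm, const int32_t *code, int ip)
{
    for (;;) {
        int32_t a, b;
        switch (code[ip++]) {
        case OP_LIT:  push(vm, code[ip++]);                      break;
        case OP_ADD:  b = pop(vm); a = pop(vm); push(vm, a + b); break;
        case OP_MUL:  b = pop(vm); a = pop(vm); push(vm, a * b); break;
        case OP_DUP:  a = pop(vm); push(vm, a); push(vm, a);     break;
        case OP_CALL: assert(vm->rsp < STACK_DEPTH);
                      vm->rs[vm->rsp++] = ip + 1; /* resume after the operand */
                      ip = code[ip];              /* jump to the subroutine   */
                      break;
        case OP_RET:  assert(vm->rsp > 0); ip = vm->rs[--vm->rsp]; break;
        case OP_HALT: return pop(vm);
        default:      assert(!"unknown opcode"); return 0;
        }
    }
}

/* Example program: 3*3 + 4*4, with "square" as a subroutine (DUP MUL RET). */
static int32_t demo_sum_of_squares(void)
{
    static const int32_t code[] = {
        OP_LIT, 3, OP_CALL, 10,  /* 0..3:   push 3, call square  */
        OP_LIT, 4, OP_CALL, 10,  /* 4..7:   push 4, call square  */
        OP_ADD, OP_HALT,         /* 8..9:   add the two squares  */
        OP_DUP, OP_MUL, OP_RET   /* 10..12: square ( n -- n*n )  */
    };
    VM vm = {0};
    return run(&vm, code, 0);    /* returns 25 */
}
```

In a design like this, porting to another chip mostly means reimplementing the handful of primitives in the `switch`; the threaded program in `code[]` does not change.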

More details to follow.

If you haven’t done so already, you may want to start by reading the Preface to Knowledge Engineering & Emerging Technologies.

January 31st, 2024 (4th ed.)

The aim of this article is to encourage you to take an end-to-end perspective in your designs, seeking to minimize the overall complexity of your system: the hardware-software-user combination. To achieve this, it helps to understand how computing, and within it the notions of the sacred and the profane, have evolved over the past 60 or so years.

The following remarks set out a ‘true north’ perspective for this conversation:

- “We are reaching the stage of development where each new generation of participants is unaware both of their overall technological ancestry and the history of the development of their speciality, and have no past to build upon.” – J.A.N. Lee, [Lee, 1996, p.54].

- “Any [one] can make things bigger, more complex. It takes a touch of genius, and a lot of courage, to move in the opposite direction.” – Ernst F. Schumacher, 1973, from “Small is Beautiful: A Study of Economics As If People Mattered”.

- “The goal [is] simple: to minimize the complexity of the hardware-software combination. [Apart from] some lip service perhaps, no-one is trying to minimize the complexity of anything and that is of great concern to me.” – Chuck Moore, [Moore, 1999]. (For a succinct introduction to Chuck Moore’s minimalism, see Less is Moore by Sam Gentle [Gentle, 2015].)

- “The arc of change is long, but it bends towards simplicity”, paraphrasing Martin Luther King.

The discussion requires a familiarity with lower-level computing, i.e. computing that is close to the underlying hardware. If you already have some familiarity with this, you can jump straight into section 2. For all backgrounds, the discussions in the Interlude (section 4) make for especially enlightening reading. Whether you find yourself in violent agreement or disagreement, your perspective is welcomed in the comments!

Between complexity and simplicity, progress, and new layers of abstraction. Continue reading this article…

By Assad Ebrahim, on January 1st, 2024 (6,747 views)

Topic: Education, Technology

2nd ed. Jan 2024 (with standalone chapters), 1st ed. 2005

Overview

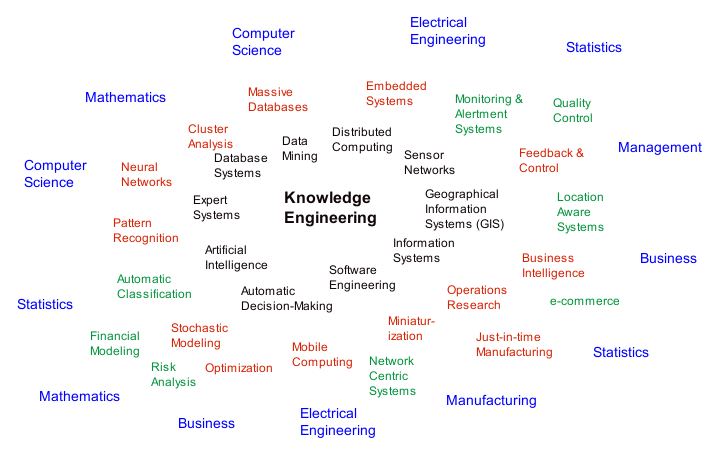

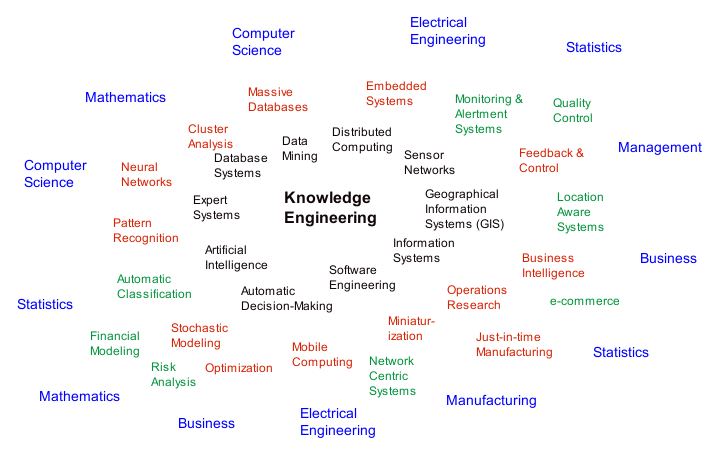

At the intersection of Mathematics, Modern Statistics, Machine Learning & Data Science, Electrical Engineering & Sensors, Computer Science, and Software Engineering is a rapidly accelerating area of activity concerned with the real-time acquisition of rich data, its near-real-time analysis and interpretation, and its subsequent use in high-quality decision-making with automatic adjustment and intelligent response. These advances are enabled by small, energy-efficient microprocessors coupled with low-cost off-the-shelf sensors, many with integrated wireless communication and geo-positional awareness, communicating with massive high-speed databases. For teams able to bridge the disciplines involved, the potential for economically productive application is limitless.

Traditional science and technology disciplines are in the outermost ring, often isolated from each other. Their integration is driving the areas from which a large portion of the technology of the coming decades is likely to emerge. Continue reading this article…

By Assad Ebrahim, on March 14th, 2023 (9,026 views)

Topic: Maths--General Interest, Technology

Updated March 21, 2023, following two bank collapses in the US and the collapse of Credit Suisse in Europe. First published July 5, 2010, two years after the start of the Great Recession.

Mathematical Finance is an area of applied mathematics that has seen explosive growth over the past 30 years as the U.S. financial markets became deregulated during the late 1980s and 1990s. From a technical point of view, Mathematical Finance uses a broad range of sophisticated mathematics for its financial models: from the partial differential equations of mathematical physics, to stochastic calculus, probabilistic modeling, mathematical optimization, statistics, and numerical methods. The practical implementation of trading strategies based upon these mathematical models requires designing efficient algorithms as well as exploiting the state-of-the-art in software engineering (real-time and embedded development, low latency network programming) and in computing hardware (FPGAs, GPUs, and parallel and distributed processing). Taken together, the technical aspects of mathematical finance and the software/hardware aspect of financial engineering lie at the intersection of business, economics, mathematics, computer science, physics, and electrical engineering. For the technologically inclined, there are ample opportunities to contribute.

The ideas of financial mathematics are at the heart of the global free-market capitalist system in place across most of the world today, which affects not only economics but also politics and society. It is worthwhile to understand the essential mechanics of the modern financial world and how it arose, regardless of whether we agree with its principles or with the impact of the financial system on social structures. In this article, I’ll motivate the origins of financial mathematics through a simplified account of the rise of the modern financial marketplace. (Update, 2012: A highly recommended graphic-novel account is Economix by Michael Goodwin, published in 2012. His website has great context, in a similarly accessible style, explaining developments since then.)

Separate from the technical content, there is a kernel of core financial ideas that every literate citizen should understand. In 1999, Clinton and Congress removed the last remaining restraints on the financial industry that Roosevelt had put in place through the famous Glass-Steagall Act of the 1930s, specifically to address the root causes of the banking crisis of 1929 and the subsequent Great Depression. Ten years after Glass-Steagall was repealed, the collapse of Bear Stearns and Lehman Brothers brought on the financial crisis of 2008 and the Great Recession, whose consequences were a decade of stagnation in western economies, accelerating income inequality, and a resurgence of nationalism across the UK, Europe, and the US. The severity of the crisis led to a limited package of restraints being placed on the US financial system in 2010 through the Dodd-Frank Act, but key parts of it were rolled back under Trump in 2018, leaving the financial market again largely deregulated.

Update 2023: Five years on, March 2023 saw the start of US bank failures, beginning with Silicon Valley Bank (SVB), the 16th-largest bank in the U.S. In just one week, two US banks collapsed that together held over $300 billion in total assets, more than the largest single US bank failure in history (Washington Mutual, in 2008). The contagion is spreading to Europe, with Credit Suisse impacted: the 2nd-largest bank in Switzerland and one of the top 8 global investment banks of the US/UK/EU. Housing-market decline, bank failures, and rising unemployment are three signs of a coming recession; the bank failures have completed the set. (See Recommended Reading.)

Continue reading this article…

TinyPhoto is a small rotating-photobook embedded graphics project that uses the low-power ATtiny85 microcontroller (3mA) and a 128×64 pixel OLED display (c.5-10mA typical, 15mA max). This combination can deliver at least 20 hrs of continuous play on a 3V coin cell battery (225mAh capacity). TinyPhoto can be readily built from a handful of through-hole electronic components (12 parts, £5) arranged to fit onto a 3cm x 7cm single-sided prototype PCB. The embedded software is c.150 lines of C code and uses less than 1,300 bytes of on-chip memory. TinyPhoto rotates through five user-selectable images using a total of 4,900 bytes (yes, bytes!) stored in on-chip flash memory. The setup produces crisp photos on the OLED display, with a refresh that appears instantaneous to the human eye when the ATtiny85 is clocked up to 8MHz. A custom device driver (200 bytes) sets up the OLED screen and enables pixel-by-pixel display. Custom Forth code converts a 1-bit (black-and-white) image into a byte-stream that can be written to the onboard flash for rapid display. It is a reminder of what can be accomplished with low-fat computing…
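The article's image conversion is written in Forth and its output format is not spelled out here. The following C sketch assumes the page-addressed memory layout common on 128×64 monochrome OLED controllers such as the SSD1306: each byte holds a vertical strip of 8 pixels, with the least significant bit at the top. Under that assumption, a full 1-bit frame packs into 128 × 64 / 8 = 1,024 bytes. The function name `pack_frame` and the row-major input format are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define OLED_W      128
#define OLED_H      64
#define OLED_PAGES  (OLED_H / 8)           /* 8 pages of 8 pixel rows each */
#define FRAME_BYTES (OLED_W * OLED_PAGES)  /* 1,024 bytes per full frame   */

/* Pack a row-major 1-bit image (pixels[y * OLED_W + x] is 0 or nonzero)
   into page-ordered bytes: out[page * OLED_W + x] holds pixel rows
   8*page .. 8*page+7 of column x, with bit 0 as the topmost row.
   The layout is an assumption modelled on SSD1306-style page addressing. */
static void pack_frame(const uint8_t *pixels, uint8_t *out)
{
    assert(pixels != NULL && out != NULL);
    for (size_t page = 0; page < OLED_PAGES; page++) {
        for (size_t x = 0; x < OLED_W; x++) {
            uint8_t column_bits = 0;
            for (size_t bit = 0; bit < 8; bit++) {
                if (pixels[(page * 8 + bit) * OLED_W + x])
                    column_bits |= (uint8_t)(1u << bit);
            }
            out[page * OLED_W + x] = column_bits;
        }
    }
}
```

Storing frames in this order would let a display driver stream each image with one sequential write, doing no per-pixel work at display time.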

The magic, of course, is in the software. This article describes how this was done, and the software that enables it. Check out the TinyPhoto review on Hackaday!

Tiny Photo: a 3cm x 7cm photo viewer powered by an ATtiny85 8-bit microcontroller sending pixel-level image data to an OLED display (128×64 pixels), running on a 3V coin cell battery. It cycles through 5 images stored in 5kB of on-chip flash memory. (Note: this is over a million times less memory than a Windows PC with 8GB of RAM.) The magic is in the software. Continue reading this article…

This article explains how to use the Arduino toolchain to program microcontrollers from the Arduino IDE using their bootloaders, and how to burn bootloaders directly onto bare microcontroller chips. It covers developing interactively with Forth (rapid prototyping), and moving your creations from a development board (Nano, Uno) to a standalone, low-cost, low-power, small-footprint chip such as the ATmega328P, ATtiny85, or ATtiny84. Each of these microcontrollers is powerful, inexpensive, and can run directly from 3V batteries without the need to boost the voltage to 5V. Additionally, we describe how to build an inexpensive (under £5), standalone 3-chip Atmel AVR universal bootloading programmer that you can use to program all of the chips above.

Continue reading this article…

Voice-controlled hardware requires four capabilities: (1) vocal response to trigger events (sensors/calculations-to-brain), (2) speech generation (brain-to-mouth), (3) speech recognition (ear-to-brain), and (4) speech understanding (brain-to-database, aka learning). These capabilities can increasingly be implemented using off-the-shelf modules, thanks to progress in advanced low-cost silicon capable of digital signal processing (DSP) and statistical learning/machine learning/AI.

In this article we look at the value chain involved in building voice control into hardware. We cover highlights in the history of artificial speech. And we show how to convert an ordinary sensor into a talking sensor for less than £5. We demonstrate this by building a Talking Passive Infra-Red (PIR) motion sensor deployed as part of an April Fool’s Day prank (jump to the design video and demonstration video).

The same design pattern can be used to create any talking sensor, with applications abounding around home, school, work, shop, factory, industrial site, mass-transit, public space, or interactive art/engineering/museum display.

Bringing Junk Model Robots to life with Talking Motion Sensors (April Fools Prank, 2021) Continue reading this article…

Abstract: This brief note explores the use of fuzzy classifiers, with membership functions chosen using a statistical heuristic (quantile statistics), to monitor time-series metrics. The time series can arise from environmental measurements, industrial process control data, or sensor system outputs. We demonstrate the implementation using the R language on an example dataset (ozone levels in New York City). Click here to skip straight to the coded solution, or read on for the discussion.
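The note's implementation is in R. To keep this page's examples in one language, here is a hypothetical C sketch of the idea, not the article's code. It estimates p10, p50 and p90 by linear interpolation between order statistics, which is the rule used by R's default `quantile()` (type 7). Values below p10 or above p90 fall crisply into the two outer classes, so about 10% of a reference sample lands in each. Values in between get graded triangular membership across three inner classes peaking at p10, p50 and p90. Using p50 as the middle breakpoint and triangular shapes are assumptions, and the sketch assumes missing values have already been removed.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

enum { VERY_LOW, LOW, NORMAL, HIGH, VERY_HIGH, N_CLASSES };

static int cmp_double(const void *a, const void *b)
{
    double x = *(const double *)a, y = *(const double *)b;
    return (x > y) - (x < y);
}

/* Quantile of already-sorted data: linear interpolation between order
   statistics, matching R's default quantile() (type 7). */
static double quantile_sorted(const double *s, size_t n, double p)
{
    assert(n > 0 && p >= 0.0 && p <= 1.0);
    double h = (double)(n - 1) * p;
    size_t lo = (size_t)h;
    if (lo + 1 >= n)
        return s[n - 1];
    return s[lo] + (h - (double)lo) * (s[lo + 1] - s[lo]);
}

typedef struct { double p10, p50, p90; } Breakpoints;

/* Estimate breakpoints from a reference sample (copied, then sorted). */
static Breakpoints fit_breakpoints(const double *x, size_t n)
{
    assert(n > 0);
    double *s = malloc(n * sizeof *s);
    assert(s != NULL);
    memcpy(s, x, n * sizeof *s);
    qsort(s, n, sizeof *s, cmp_double);
    Breakpoints b = { quantile_sorted(s, n, 0.10),
                      quantile_sorted(s, n, 0.50),
                      quantile_sorted(s, n, 0.90) };
    free(s);
    return b;
}

/* Fill mu[N_CLASSES] with class memberships that sum to 1. */
static void classify(const Breakpoints *b, double x, double mu[N_CLASSES])
{
    for (int k = 0; k < N_CLASSES; k++)
        mu[k] = 0.0;
    if (x < b->p10) { mu[VERY_LOW] = 1.0; return; }   /* crisp outer class */
    if (x > b->p90) { mu[VERY_HIGH] = 1.0; return; }  /* crisp outer class */
    if (x <= b->p50) {                                 /* graded LOW/NORMAL */
        double w = (b->p50 > b->p10) ? (b->p50 - x) / (b->p50 - b->p10) : 0.0;
        mu[LOW] = w;
        mu[NORMAL] = 1.0 - w;
    } else {                                           /* graded NORMAL/HIGH */
        double w = (x - b->p50) / (b->p90 - b->p50);   /* p90 > p50 here */
        mu[HIGH] = w;
        mu[NORMAL] = 1.0 - w;
    }
}
```

For a monitoring series, the breakpoints would typically be fitted once on a reference period and `classify` then applied to each new reading. The crisp classifier mentioned in the caption corresponds to keeping only the two outer tests and treating everything in between as a single class.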

Fuzzy classification into 5 classes using p10 and p90 levels to achieve an 80-20 rule in the outermost classes and graded class membership in the inner three classes. Comparison with crisp classifier using the same 80-20 rule is shown in the bottom panel of the figure. Continue reading this article…

The Emerging Technology page has moved here

Curated Shorts

The Computing page has moved here.


Stats: 1,066,417 article views since 2010 (March update)

Dear Readers: Welcome to the conversation! We publish long-form pieces as well as a curated collection of spotlighted articles covering a broader range of topics. Notifications for new long-form articles are through the feeds (you can join below). We love hearing from you. Feel free to leave your thoughts in comments, or use the contact information to reach us!
