(Statistics and Data Mining II)

Automated decision problems are frequently encountered in statistical data processing and data mining. An heuristic filter or heuristic classifier typically has a limited set of input data from which to arrive at a set of conclusions and make a decision: REJECT, ACCEPT, or UNDETERMINED. In such cases, pre-processing the input data before applying the heuristic classifier can substantially enhance the performance of the decision system.

In this article, I’ll motivate the use of a radar-tracking algorithm to improve the performance of automated decision making and statistical estimation in data processing. I will illustrate using the website visitation statistics problem.

In a previous article, I discussed the limitations of generically processed, or “canned”, website visitation statistics. Remedying these deficiencies requires using customized data filters that address seven challenges.

The first layer of filtering concerns the discrimination between robotic and human visitors. Human visitors are the signal that is desired to be measured; robotic visitors are the “noise” that needs to be filtered out in order to get meaningful visitation estimates.

Viewed from this perspective, the website visitation problem will be seen to be similar to the challenges facing a TWS (track-while-scan) radar, tracking sonar, or general sensor-based tracking system, and thus, to be amenable to a similar solution approach.

By modelling the web server as a sensor and HTTP requests as sensor returns, we can re-formulate the website visitation statistics problem as a sensor tracking and classification problem. Improved estimates can then be obtained by applying filtering techniques from radar and sonar processing.

A little background on the general tracking and classification problem is helpful.

The Sensor-Based Tracking Problem

Sensor-based tracking problems are common in a variety of applications. In air defense, the goal may be target prosecution using radar sensing; in undersea surveillance, it may be intruder detection using sonar; and in population ecology or traffic monitoring, the goal may be obtaining accurate counts of the geographic use of a resource or route, using a combination of IR sensors, automated cameras, or ground-based pressure sensors.

Regardless of the particular application, tracking problems are typically handled by a processing chain whose elements can be divided into three principal stages:

- detection, which identifies the individual sensor returns that indicate a target’s presence;

- tracking, which is a matching problem that must decide which detections ought to be grouped together as a single track entity; and

- classification, which is a decision problem that takes completed tracks as inputs, extracts attributes about these tracks, and uses aggregate statistics to decide which class the target, represented by a given track, should belong to.

In most solution architectures, detection precedes tracking, but classification can occur before, during, or after tracking. In addition, filters (partial classifiers) can be used to improve a specific portion of the processing chain.

Challenges Facing Sensor-Based Tracking

Most sensor-based tracking problems face a number of common challenges. I’ll illustrate with a radar example — though the concepts apply equally well to sonar tracking.

A typical track-while-scan (TWS) radar system gets a discrete (temporally separated) sequence of detections (“hits”) on a given target. Each detection is of a finite, typically quite short, duration. From each detection is extracted a given collection of attributes about that hit. The attributes typically include detection time, position, and reflected intensity, as well as various statistical moments and other mathematical features of the detection. The goal of these radar detections is typically to identify (detect and classify) the target of interest, and its trajectory. By successfully tracking the target in motion, knowledge of the target can usually be substantially improved, since repeated detections of the moving target provide a greater volume of information and allow aggregate, track-based, statistics to also be used.

But effective radar tracking requires overcoming several non-trivial challenges, two of which are also relevant to our web-server statistics problem.

The first challenge is to maintain track integrity despite gaps in the incoming data stream. These gaps can be caused by changes in a target’s aspect to the radar head, for example, or by obstruction of the EM signal due to cloud cover, weather patterns, or intermediate objects. As a consequence, one or more radar interrogations (“echos”) may be lost from the sequence of incoming data — with knock-on effects down the processing chain, depending on how each processing module handles “gap-py” data.

The second challenge is to correctly track all targets in the radar’s field of view (FOV). The problem here is that there is typically no a priori knowledge of the total number of targets that are in the radar’s FOV at any given time. One has only the stream of sensor detections (echo returns), their detection times, and their extracted attributes from which to make decisions.

To respond to the second challenge, most radar and sonar tracking processors — indeed most sensor-based tracking processors — evolve multiple “tracks-in-progress” simultaneously. Each track is assumed to represent the behavior of at least one of the unknown number of targets in the field of view. These tracks are typically maintained in real-time.

The first challenge, that of track segmentation, is typically addressed by introducing the concept of tracking gates to account either for gaps in the data or for track crossings. Additional filters handle track segmentation due to clutter (scattering interference).

With this as background, we turn now to our headlined problem.

Behavioral Classification, Robot Filters, and the Need for Data Pre-Processing

Recall that the goal of the web-site visitation statistics problem is an accurate count of human readers, as distinguished from robotic traffic or accidental human visitors.

Thus, two disjoint visitor classes arise naturally: robot and human. Human visitors are the signal of interest; robot visitors are the “noise” to be filtered out. Now, members associated with each of these two visitor classes are observed to exhibit1 consistently distinctive behavioral characteristics that can be quantified.2 It is this problem structure that makes behavioral classification an effective heuristic filter.

As we have seen in the radar example above, the performance of behavioral classification depends largely on the integrity of the visit data provided for analysis. Specifically, the crucial element is how much of the characteristic behavior of each class member is contained in the visit data. We therefore reformulate our web-server problem in order to exploit track processing algorithms that address precisely this issue.

Modelling the Server-Side Visitation Statistics Problem as a Sensor Tracking Problem

By taking a sensor systems point of view, we can reduce the website visitation statistics problem to a sensor tracking and classification problem.

Let the web-server itself be considered as a detection sensor. The individual sensor detections are HTTP requests (“hits”). For each hit (HTTP request), the web-server records a collection of attributes including a timestamp, the visitor’s (remote host) IP address, requested URL, referring URL, and various other fields. The server log is, thus, a record of the real-time data stream, along with additional attributes of each detection.

A visitor “track” shall then consist of all of the HTTP requests associated with a hypothesized web-site visit. Note: the emphasis must be on hypothesized because there is no crisp definition as to what constitutes a complete visit, i.e. when a visit is judged to have ended and when the next one (by the same visitor) has started. These and other similar parameters of the problem / model must be selected in a way that is statistically reasonable and that fits the application logic.

Once these modelling parameters have been selected, the model is complete.

The count that we’re after, namely web visitations by a human reader, now becomes a count of the tracks that remain after various heuristic filters and statistical tests have rejected all tracks having sufficiently ROBOT-like characteristics.

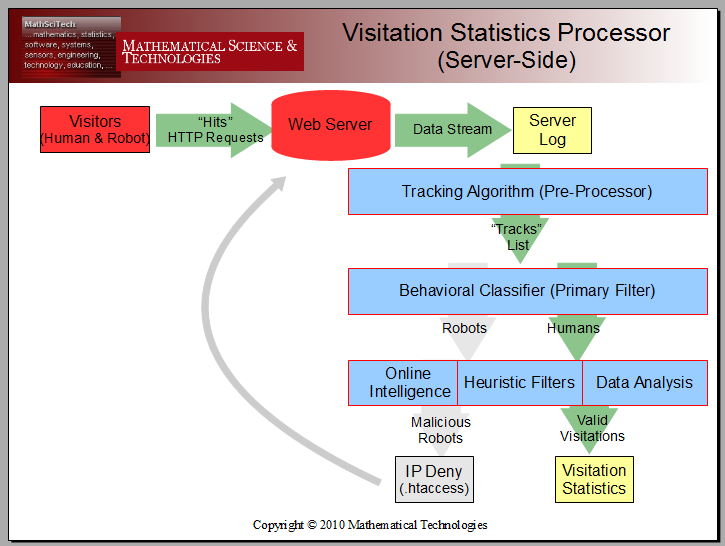

The block diagram below shows the solution design.

Block Diagram of a Server-Side Statistics Processing Engine using a Tracking Pre-Processor, Behavioral Classifier, and Various Heuristic Filters

Notice the place of the track forming pre-processor as the first (upstream) filtering component. The downstream processing chain commences with a behavioral classifier and includes various other heuristic filters.

With this design, the quality of the final count can be clearly seen to depend on three components:

- the characteristics of the web-server-as-sensor,

- the performance of the track-forming processor, and

- the performance of the various classifiers used as rejection filters.

The Web-Server as Sensor

For web servers built on the LAMP stack (Linux, Apache, MySQL, and PHP), the web-server in question is Apache, and the raw data are the Apache server logs.

The challenges for track-forming algorithms that are present in radar and sonar tracking (namely multiple simultaneous targets and “gap-py” data), also appear in the web-server-as-sensor model. Their appearance is due to the particular characteristics of web-servers and the nature of visitor behavior, as explained below.

Multiple simultaneous targets: The need for handling multiple simultaneous targets arises because of the capability of the Apache web-server (and all modern competitors) to handle simultaneous connections. This means that the web-server can service HTTP requests from a large number of visitors simultaneously. Although there is a physical limit to the number of simultaneous connections that the Apache web-server allows, the number is large enough that we may consider it to be unbounded for the purpose of our model.

Gaps in the Data: The presence of gaps in the web-server data stream is a corollary of the ability of web-servers to handle simultaneous connections, and the asynchronous nature of visitor arrival times and browsing behavior. Visitors, whether human or robotic, can arrive or depart at any time, and can spend an unconstrained amount of time on a particular page before making an additional request, or departing entirely.3

These two characteristics of web-servers and browsing behavior mean that the information about an hypothetical visitor’s visit is typically spread across time and interspersed with data from an unknown number of other overlapping visits.

We have seen that track segmentation, whether due to gaps in the data, a weak matching algorithm, or the presence of noise (clutter), increases the likelihood of mis-classification. Thus, processing the server logs directly, as a time-series of sensor returns, makes visitor tracks vulnerable to premature segmentation, with corresponding reduction in tracking performance.

Requirements for a Track-Forming Processor

What is required, then, is a track-forming pre-processor whose sole purpose is to ensure the integrity of tracks (i.e. guard against track segmentation), and to maximise their (legitimate) length.

A good design will see a track-forming processor that receives a web-server’s raw data stream as input, and emits a high quality tracks-list as output. The tracks-list is then available to any number of follow-on classifiers that can exploit track level features to provide more accurate human visitation estimates.

What remains is the software implementation, which the next article takes up.

Summary

Obtaining meaningful visitor statistics is a non-trivial problem in the face of the seven challenges to using raw or generically processed statistics. The metrics that we are interested in are those associated with only the interested human readers of the site, which requires filtering out all autonomous network traffic (robots) and incidental human visitors.

A powerful class of heuristic filters, capable of accomplishing this, are behavioral classifiers. These are useful when behavior is distinct between elements of different classes. This is case in the web statistics problem, where the two main classes are human and robot, each of which exhibits distinct characteristic behavioral attributes.

But in order to work well, behavioral classifiers require an input that contains information on each visitor and that captures all of the significant behavior associated with that visit. Since modern web servers can handle multiple simultaneous visitors, and since visitor behavior is asynchronous, obtaining such input for a behavioral classifier requires a pre-processing algorithm.

Enter an insight: by modelling the problem as a sensor detection and classification problem, we can transform the web-site visitor statistics problem into a sensor tracking problem, and use techniques from radar and sonar signal processing

This is what we have done, and the resulting server-side design is shown in the block diagram above. Specifically, our server-side solution is built upon the raw server logs from the Apache web server. A collection of heuristic filters, beginning with a behavioral classifier, work together to provide improved estimates of the interested human traffic.

The next article will look at the first filter block of the design: the track forming pre-processor. Stay tuned!

In the next article of this series, we’ll discuss the software implementation of a tracking algorithm, both the general pattern, and its specialization into a pre-processor for the server-side statistics processing engine. Stay tuned!

References and Related Reading

- Ebrahim, A.K., Part 1: The Analysis of Visitor Statistics: Data-Mining-in-the-Small

- Skolnik, M. I., Introduction to Radar Systems. 2nd ed. New York: McGraw-Hill, 1980

- United States Naval Academy, Systems Engineering Department, Fundamentals of Naval Weapon Systems, Ch.6: Track-While-Scan Concepts

- Perez-Jacome, J., Automatic Target Recognition Systems, 1996,

In the next article of this series, we’ll discuss the software implementation of a tracking algorithm, both the general pattern, and its specialization into a pre-processor for the server-side statistics processing engine. Stay tuned!

Footnotes

- The goal of a data exploration / data mining phase is to discover the useful behavioral characteristics that are to form the kernel of the various classifiers and heuristic filters to be deployed against the problem. One way this is done is by searching for and extracting candidate features that segment the data into clusters whose members can be verified (statistically) to fall into known and useful classes. ↩

- As a simple example: a reasonably effective behavioral classification kernel can be formed using as few as three attributes: browsing speed, query frequency, and traversal path. ↩

- In particular, departures (and therefore the closure or expiration of a given track) must be inferred carefully, since simply following an external web-link does not preclude a return visit by the same visitor to continue reading the same or a different article within the site. ↩

Leave a Reply